Modern businesses need a unified data platform to turn data into insights faster, reduce complexity, and scale AI initiatives, so that data-driven decisions are reliable, timely, and truly impactful.

What Is the Databricks Lakehouse?

The Databricks Lakehouse is a modern data platform that combines the flexibility of data lakes with the performance and reliability of data warehouses. Built by Databricks, it allows organizations to store, process, analyze, and apply AI to all types of data in one place. Instead of managing separate systems for analytics, reporting, and machine learning, teams work on a single, unified data and AI platform. This matters because it removes data silos, simplifies operations, and accelerates innovation across analytics and AI use cases.

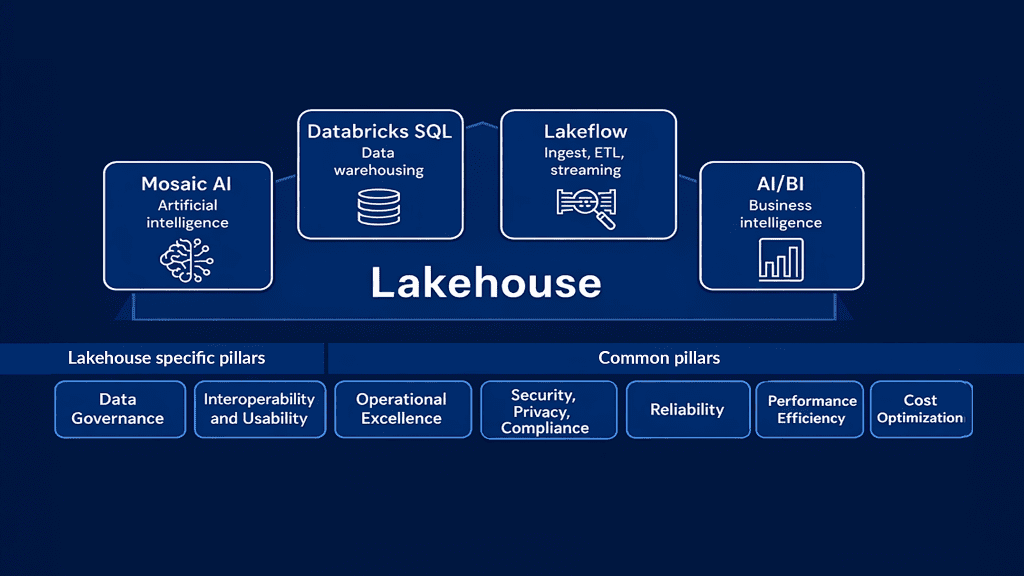

Databricks Lakehouse Architecture

The Databricks Lakehouse architecture is designed around a simple but powerful idea: keep data open, scalable, and accessible while delivering high-performance analytics and AI. At its core, the architecture follows an open data lakehouse approach. Data lives in low-cost cloud object storage in open file formats, and metadata and processing layers on top add table structure, transactions, and governance.

This architecture separates storage from compute, which allows businesses to scale each independently based on workload needs. Structured, semi-structured, and unstructured data coexist in the same environment. Tools for SQL analytics, data engineering, and machine learning all operate on the same data foundation, ensuring consistency and collaboration across teams.

The foundational elements of the Lakehouse include:

- Cloud object storage as the data foundation

- Delta Lake for reliability, ACID transactions, and data quality

- Scalable compute for analytics and AI workloads

- Unified governance and security layer

- Native support for BI, data science, and engineering tools
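To make the "ACID transactions" item above more concrete, here is a deliberately simplified Python model of the idea behind Delta Lake's transaction log. Each commit is a numbered JSON file that records which data files were added or removed. A commit succeeds only if no other writer has already claimed that version number. The class name `ToyTransactionLog`, the `_log` directory, and the JSON layout are hypothetical. This is not Delta Lake's API or on-disk format. It is only a sketch of the optimistic-concurrency pattern, and it assumes a local filesystem where `os.link` fails when the target already exists.

```python
import json
import os
import tempfile
from typing import Iterable, Optional, Set


class ToyTransactionLog:
    """Hypothetical, simplified model of a table transaction log.

    Each commit is a numbered JSON file listing data files added to or removed
    from the table. This illustrates the idea behind Delta Lake's log; it is
    not Delta Lake's API or on-disk format.
    """

    def __init__(self, table_dir: str) -> None:
        self.log_dir = os.path.join(table_dir, "_log")  # hypothetical directory name
        os.makedirs(self.log_dir, exist_ok=True)

    def _commit_path(self, version: int) -> str:
        # Zero-padded names keep commit files sorted by version.
        return os.path.join(self.log_dir, f"{version:020d}.json")

    def latest_version(self) -> int:
        versions = [int(name[:-5]) for name in os.listdir(self.log_dir) if name.endswith(".json")]
        return max(versions, default=-1)  # -1 means the table has no commits yet

    def commit(self, version: int, add: Iterable[str] = (), remove: Iterable[str] = ()) -> bool:
        """Atomically claims `version`; returns False if another writer got there first."""
        actions = {"add": sorted(add), "remove": sorted(remove)}
        # Write the full commit to a temp file first, so readers never see partial JSON.
        fd, tmp_path = tempfile.mkstemp(dir=self.log_dir, suffix=".tmp")
        with os.fdopen(fd, "w") as f:
            json.dump(actions, f)
        try:
            # os.link raises FileExistsError if the target exists: an atomic "put if absent".
            os.link(tmp_path, self._commit_path(version))
        except FileExistsError:
            return False  # conflict: the caller should re-read the log and retry
        finally:
            os.remove(tmp_path)
        return True

    def snapshot(self, version: Optional[int] = None) -> Set[str]:
        """Replays commits 0..version to get the set of live data files."""
        if version is None:
            version = self.latest_version()
        files: Set[str] = set()
        for v in range(version + 1):
            with open(self._commit_path(v)) as f:
                actions = json.load(f)
            files.update(actions["add"])
            files.difference_update(actions["remove"])
        return files


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as table:
        log = ToyTransactionLog(table)
        log.commit(0, add=["part-0.parquet"])
        log.commit(1, add=["part-1.parquet"])
        # Two writers both saw version 1 and race to commit version 2.
        first = log.commit(2, add=["part-2.parquet"], remove=["part-0.parquet"])
        second = log.commit(2, add=["part-3.parquet"])
        print(first, second)            # True False
        print(sorted(log.snapshot()))   # ['part-1.parquet', 'part-2.parquet']
        print(sorted(log.snapshot(0)))  # ['part-0.parquet']
```

Readers rebuild the table by replaying commits in order, so they only ever see fully committed versions. A writer that loses a race gets `False` instead of silently overwriting someone else's changes.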

How Databricks Lakehouse Enables AI and Analytics

Imagine a world where analysts and data scientists don’t waste hours moving data between systems. On Databricks, data preparation, exploration, and modeling happen in one place and flow together like chapters in the same story. There is no need to copy files between tools, which cuts delays and the errors that copying introduces. Instead, teams work together on a single platform, turning raw data into insights faster.

The lakehouse platform also supports real-time and batch analytics on the same data. This makes it easier to train models on historical data and apply them with low latency to streaming or live data, enabling smarter and faster business decisions.
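The batch-plus-streaming pattern described above can be sketched in plain Python. A simple model is fitted once on historical records (the batch side). It is then applied to each event as it arrives (the streaming side). On Databricks this would typically be done with Spark, and Spark Structured Streaming can read the same Delta tables used for training. In this sketch, a list and a generator stand in for those tables and the stream. `ZScoreModel`, `score_stream`, `live_events`, the sample values, and the 3-standard-deviation threshold are hypothetical choices for illustration, not a Databricks API. A rule like this is a toy version of the anomaly scoring behind use cases such as fraud detection.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Iterable, Iterator, Tuple


@dataclass(frozen=True)
class ZScoreModel:
    """Hypothetical anomaly model: flags values far from the historical mean."""

    mu: float
    sigma: float
    threshold: float = 3.0  # assumed cut-off, in standard deviations

    @classmethod
    def fit(cls, history: Iterable[float], threshold: float = 3.0) -> "ZScoreModel":
        """Batch step: learn the mean and spread from historical records."""
        values = list(history)
        return cls(mu=mean(values), sigma=pstdev(values), threshold=threshold)

    def is_anomaly(self, value: float) -> bool:
        if self.sigma == 0:
            return value != self.mu  # constant history: any change is unusual
        return abs(value - self.mu) / self.sigma > self.threshold


def score_stream(model: ZScoreModel, events: Iterable[float]) -> Iterator[Tuple[float, bool]]:
    """Streaming step: score each event as it arrives, reusing the batch-trained model."""
    for value in events:
        yield value, model.is_anomaly(value)


def live_events() -> Iterator[float]:
    """Hypothetical stand-in for a real-time source such as a message queue."""
    yield from [101, 140, 99, 60]


if __name__ == "__main__":
    history = [100, 102, 98, 101, 99, 100, 103, 97]  # illustrative values only
    model = ZScoreModel.fit(history)
    for value, flagged in score_stream(model, live_events()):
        print(value, "ANOMALY" if flagged else "ok")
    # 101 ok
    # 140 ANOMALY
    # 99 ok
    # 60 ANOMALY
```

The model is fitted once and then reused, so the same scoring logic serves both historical backfills and live traffic. That is the consistency benefit of keeping batch and streaming work on one platform.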

Benefits for Machine Learning and Data Management

The Databricks Lakehouse simplifies how teams build, deploy, and manage AI solutions. It removes friction between data management and advanced analytics workflows.

This approach delivers several advantages:

- Faster model development with Databricks for machine learning

- A unified data analytics platform for SQL, Python, and ML

- Consistent data quality using Delta Lake

- Lower costs with scalable cloud storage and compute

- Better collaboration between analysts and data scientists

- Strong governance across the full data lifecycle

Enterprise Use Cases for AI and Analytics on Databricks

Enterprises use the Databricks Lakehouse to turn raw data into intelligent action. Its flexibility supports both operational and strategic workloads across industries.

Common Databricks Lakehouse use cases for enterprise AI include:

- Predictive maintenance in manufacturing

- Personalized recommendations in retail and media

- Fraud detection and risk scoring in finance

- Customer 360 analytics for sales and marketing

- Demand forecasting and supply chain optimization

- Real-time analytics for IoT data

- Natural language processing for customer support insights

Databricks Lakehouse vs Traditional Data Warehouses

Comparing the Databricks Lakehouse with a traditional data warehouse for analytics shows a shift from rigid, siloed systems to open and flexible platforms.

| Feature | Databricks Lakehouse | Traditional Data Warehouse |
| --- | --- | --- |
| Data Types | Structured, semi-structured, unstructured | Mostly structured |
| AI & ML Support | Native and integrated | Limited or external |
| Scalability | Elastic and cloud-native | Fixed and expensive |
| Data Sharing | Open formats | Closed formats |
| Cost Efficiency | Optimized cloud storage | High storage costs |

The Lakehouse delivers more flexibility, better AI support, and lower complexity than traditional warehouses.

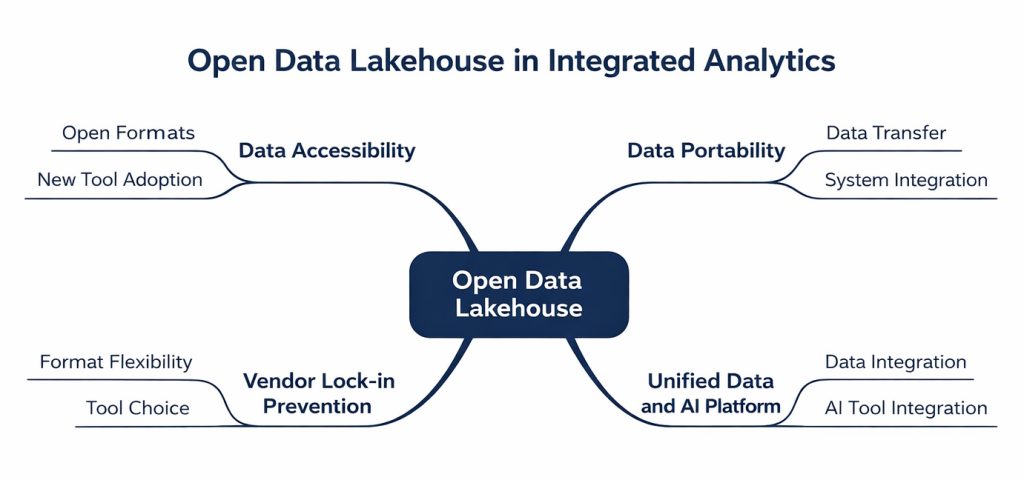

Role of the Open Data Lakehouse in Integrated Analytics

An open data lakehouse plays a critical role in integrated analytics by keeping data accessible and portable. Open formats reduce vendor lock-in and let organizations adopt new tools while maintaining a unified data and AI platform.

Future of AI Innovation with the Lakehouse Platform

The future of AI is all about bringing data, analytics, and machine learning together on one platform. Lakehouse technology will drive real-time AI, smarter analytics, and intelligent applications. As businesses embrace generative AI and advanced models, the lakehouse will provide a reliable foundation that grows with their needs.

Conclusion

The Databricks Lakehouse is the next big thing because it brings data, analytics, and AI together on one powerful platform. It simplifies complex architectures and streamlines data management. Businesses gain faster insights, better collaboration, and stronger AI capabilities. With open standards and cloud scalability, it supports both today’s analytics and tomorrow’s AI. Most importantly, it helps organizations move from data to decisions with confidence.

Real-world impact is already visible through this Databricks Lakehouse AI success story, showcasing how enterprises accelerate analytics and AI-driven outcomes.